The project.

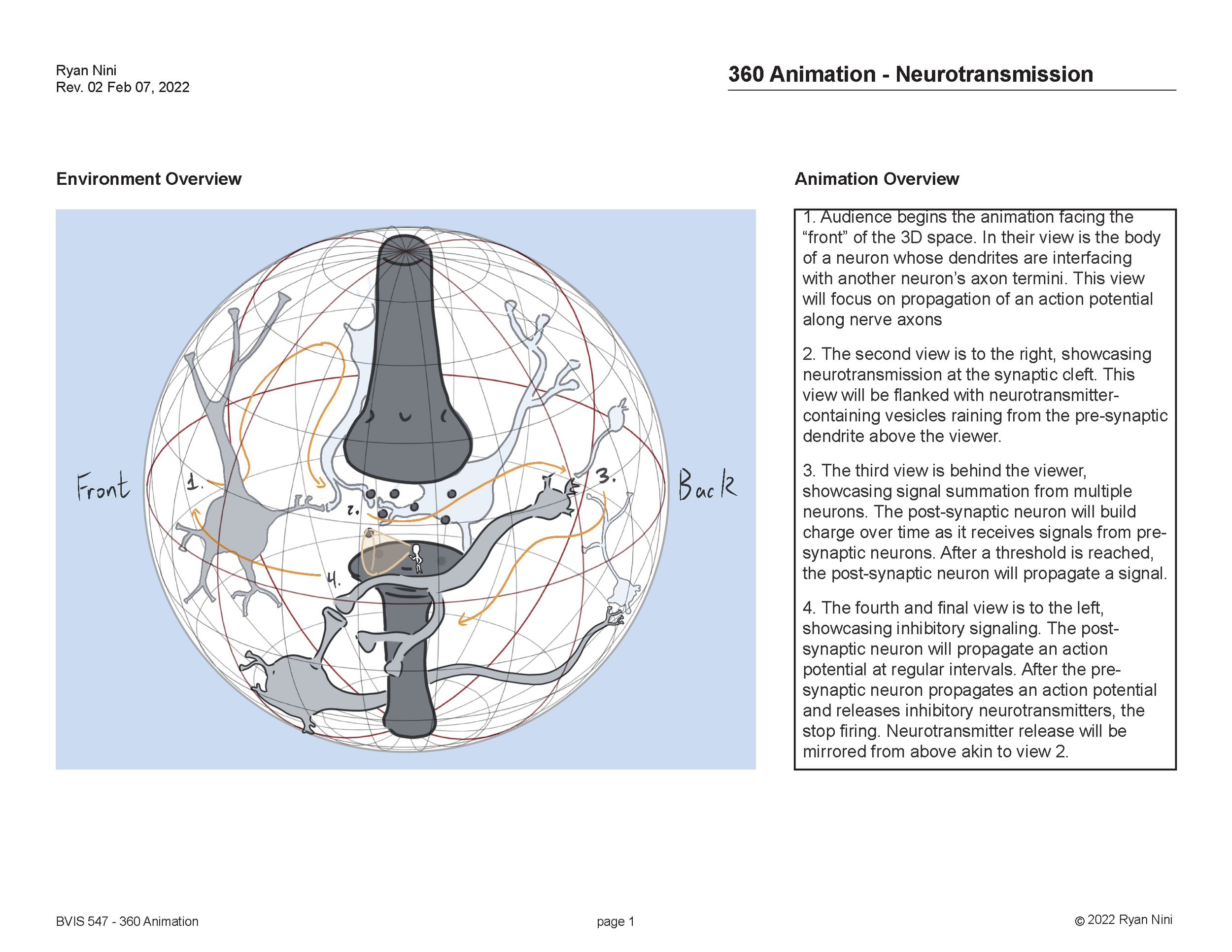

Neurotransmission is a complex biological topic typically taught in university-level courses through a series of still images accompanied by descriptive text. Existing media and references do not cater to lower levels of scientific literacy. This presents a considerable barrier to entry for lay audiences who want to learn about this fundamental process, which plays a role in a number of neuropathologies. Immersive media is poised to be a potent educational tool for conveying complex information in a visually and experientially engaging way. Affordable headsets and the medium's growing use in public education spaces make it increasingly accessible. The novelty of the medium presents particular visualization challenges compared to traditional media (e.g. planar animations), including motion sickness and audience attention drifting to low-priority elements or action. To address motion sickness, the viewer is placed statically in a nerve synapse. Each view presents a particular facet of neurotransmission, with guiding text call-outs that are animated to reinforce visual concepts. This counters misdirected attention while giving visual space to simplify complex concepts into discrete representations. While the animation is a brief 30 seconds, the experience is designed to loop seamlessly so that audience members can take as much time as they need to experience and absorb the educational content.

-

Lay audience, scientifically curious.

-

Identify the basic features of nerves

Understand the basic steps in neurotransmission

Identify general effects of neurotransmitters

-

VR-enabled smartphone, standalone headset, or 360-enabled video player

The plan.

The main focus of storyboarding for this project was to clearly establish spatial relationships and the flow between views. Because viewers are free to choose where to look, I wanted each view to carry learning content while clearly establishing the motion needed to convey it. This is reflected in the combined use of planar projections and spherical representations of the environment.
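One common way to relate flat storyboard frames to a spherical environment is the equirectangular projection often used for 360° video. In that projection, longitude maps to horizontal position and latitude maps to vertical position. The sketch below is an illustration and does not come from the project files. It assumes the equirectangular format, a +y-up convention with -z as the forward direction at the frame centre, and the hypothetical helpers `direction_to_equirect` and `equirect_to_direction`.

```python
import math

# Assumed convention (not from the project): +y is up, -z is the forward
# view direction at the centre of the frame, longitude grows toward +x.


def direction_to_equirect(x, y, z, width, height):
    """Map a unit view direction to pixel coordinates in an equirectangular frame."""
    lon = math.atan2(x, -z)                   # -pi..pi, 0 = straight ahead
    lat = math.asin(max(-1.0, min(1.0, y)))   # -pi/2..pi/2, clamped for safety
    u = (lon / (2 * math.pi) + 0.5) * width   # left/right edges = directly behind
    v = (0.5 - lat / math.pi) * height        # top edge = straight up
    return u, v


def equirect_to_direction(u, v, width, height):
    """Inverse mapping: pixel coordinates back to a unit view direction."""
    lon = (u / width - 0.5) * 2 * math.pi
    lat = (0.5 - v / height) * math.pi
    return (
        math.cos(lat) * math.sin(lon),
        math.sin(lat),
        -math.cos(lat) * math.cos(lon),
    )


if __name__ == "__main__":
    # Where would a call-out placed directly to the viewer's right land
    # in a hypothetical 4096x2048 frame?
    print(direction_to_equirect(1.0, 0.0, 0.0, 4096, 2048))
```

In a 4096x2048 frame, content directly to the viewer's right sits three quarters of the way across the image at mid-height. A rough storyboard sketched on the flat frame can therefore be checked against where the viewer will actually need to turn.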

The process.

Procedurally generating nerves with Geometry Nodes

The complex geometry of nerves, and the specific shapes my animation needed, presented a design challenge. Sculpting each nerve by hand would have been a straightforward, albeit tedious, approach, so I decided to experiment with Geometry Nodes instead. Although developing the appropriate system took days of upfront work, the reward was a flexible system able to generate nerves from a collection of splines.

These splines, along with a dozen or so parameters, can be modified on the fly to iteratively generate nerves as needed. The output still requires a modest amount of clean-up, but the tool provided the flexibility and power to generate most of the models in my scene.
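The core idea of generating a nerve from a spline is to sweep a cross-section along a curve and scale it by a per-point radius. The sketch below illustrates this in plain Python rather than in Geometry Nodes, where Curve to Mesh plays this role. It is not the project's node setup. The function names and the `taper` parameter are hypothetical, and the frame construction is a simple helper-axis method rather than Blender's curve normals (e.g. Minimum Twist).

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(a):
    length = math.sqrt(sum(x * x for x in a))
    return tuple(x / length for x in a)


def sweep_tube(points, radii, segments=8):
    """Sweep a circular profile along a polyline, scaled by a per-point radius.

    Assumes consecutive points are distinct. Returns (vertices, quad faces).
    """
    verts, faces = [], []
    n = len(points)
    for i, (p, r) in enumerate(zip(points, radii)):
        # Tangent from the neighbouring points (one-sided at the two ends).
        t = _normalize(_sub(points[min(i + 1, n - 1)], points[max(i - 1, 0)]))
        # Any helper axis that is not parallel to the tangent yields a frame.
        helper = (0.0, 0.0, 1.0) if abs(t[2]) < 0.9 else (1.0, 0.0, 0.0)
        normal = _normalize(_cross(t, helper))
        binormal = _cross(t, normal)  # unit length: t and normal are orthonormal
        for k in range(segments):
            angle = 2 * math.pi * k / segments
            c, s = math.cos(angle), math.sin(angle)
            verts.append(tuple(p[j] + r * (c * normal[j] + s * binormal[j])
                               for j in range(3)))
    # Connect each ring to the next with quads, wrapping around the circle.
    for i in range(n - 1):
        for k in range(segments):
            a = i * segments + k
            b = i * segments + (k + 1) % segments
            faces.append((a, b, b + segments, a + segments))
    return verts, faces


def taper(count, start_radius, end_radius):
    """Hypothetical parameter: linearly taper the radius along the spline."""
    return [start_radius + (end_radius - start_radius) * i / (count - 1)
            for i in range(count)]


if __name__ == "__main__":
    # A hypothetical axon spline; editing the points or radii regenerates the mesh.
    axon = [(0.0, 0.0, 0.0), (1.0, 0.2, 0.0), (2.0, 0.1, 0.3), (3.0, 0.0, 0.5)]
    verts, faces = sweep_tube(axon, taper(len(axon), 0.3, 0.1), segments=12)
    print(len(verts), len(faces))
```

Because the mesh is a pure function of the points and a handful of parameters, regenerating a nerve after tweaking the spline is cheap. This is the same property that made the node-based approach pay off. The helper-axis frame can twist abruptly where the helper switches, which is one reason dedicated curve-normal modes exist.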

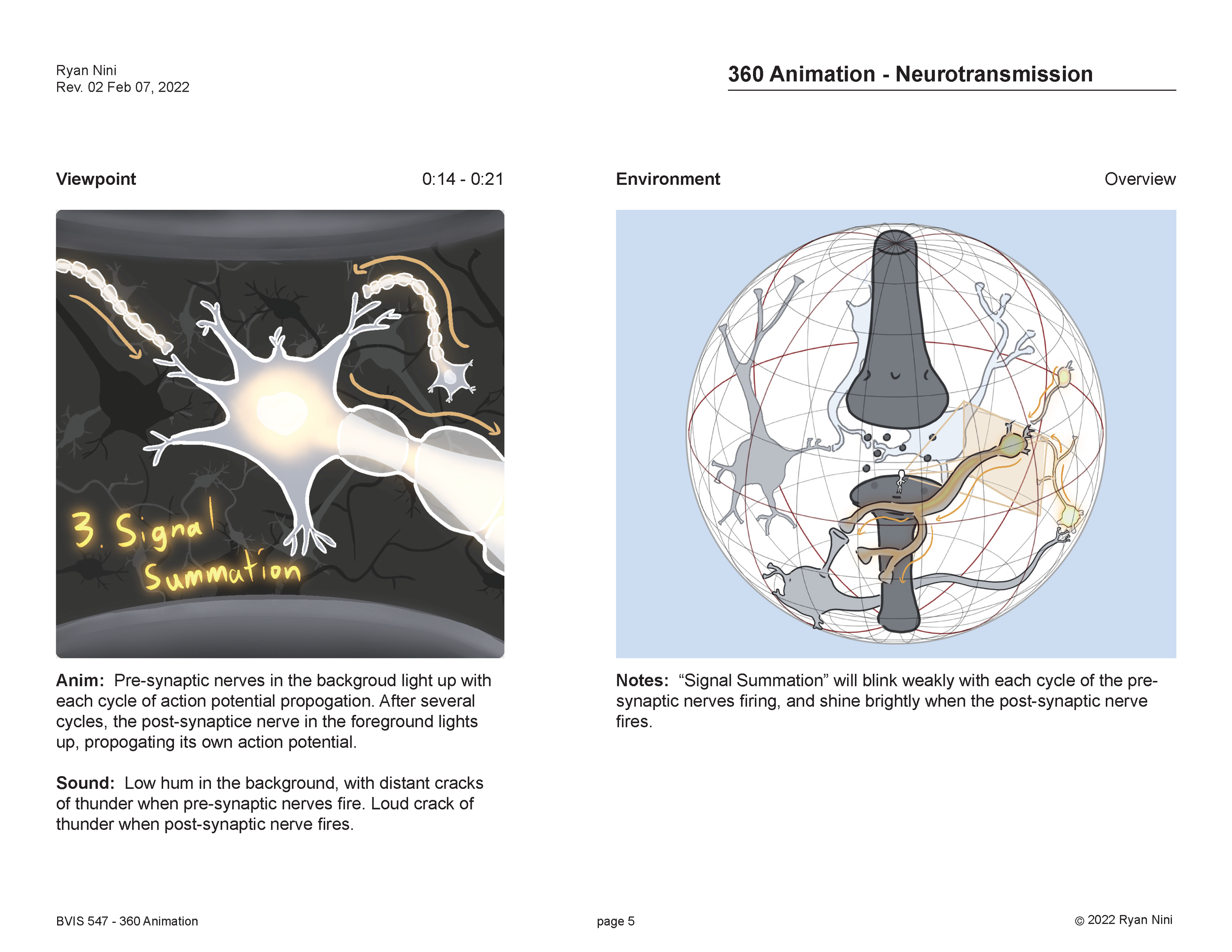

Simulating action potentials with Dynamic Paint Simulation

For my action potentials, I needed a way to propagate a material change across my nerve meshes. Dynamic Paint simulation proved an elegant solution. A dummy object applied values to the mesh faces based on proximity, and those values changed over time once the object moved away from each face. These values then drove the intensity of the emission channel, giving the illusion of an action potential propagating down the length of the nerve.

While the initial simulations were quite hefty, these values could be baked to textures to keep animation and rendering performant.
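The proximity-paint idea can be reduced to a one-dimensional toy model. Faces are laid out along the nerve, and a brush moves past them. A face is fully painted while the brush is in range, and its value fades frame by frame once the brush has passed. The paint value then scales emission. This is a sketch under stated assumptions, not Blender's Dynamic Paint solver. The exponential `decay` is an assumed stand-in for Dynamic Paint's dissolve behaviour, and `emission_strength` with its `gain` is a hypothetical shader mapping.

```python
def simulate_paint(face_positions, brush_path, radius, decay):
    """Per-frame paint values (0..1) for faces laid out along a nerve.

    face_positions: distance of each face along the nerve.
    brush_path: position of the dummy brush object on each frame.
    decay: fraction of a face's value kept per frame once the brush is out of
           range (an assumed exponential fade, standing in for dissolve).
    """
    values = [0.0] * len(face_positions)
    frames = []
    for brush_x in brush_path:
        for i, x in enumerate(face_positions):
            if abs(x - brush_x) <= radius:
                values[i] = 1.0      # brush in range: paint the face fully
            else:
                values[i] *= decay   # brush has moved on: let the value fade
        frames.append(list(values))  # snapshot, i.e. what would be baked
    return frames


def emission_strength(value, base=0.0, gain=20.0):
    """Hypothetical shader mapping: paint value drives emission intensity."""
    return base + gain * value


if __name__ == "__main__":
    faces = list(range(10))          # ten faces, one unit apart
    path = [0, 1, 2, 3, 4, 5]        # brush advances one face per frame
    for frame in simulate_paint(faces, path, radius=0.5, decay=0.5):
        print([round(emission_strength(v), 2) for v in frame])
```

Each printed row shows a bright leading edge with a fading tail behind it, which is the travelling-pulse look described above. Storing each frame's snapshot is the toy equivalent of baking the values so they need not be recomputed at render time.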

Demonstrating neurotransmission with Particle Effects

Blender has a modest particle system that can interact with physics simulations. With a few tweaks to Brownian motion and some attraction forces, I was able to create a simple particle flow that felt organic yet travelled cleanly from the pre-synaptic axon to the post-synaptic dendrite. These systems could then be duplicated for multiple rounds of emission, simplifying the setup process.
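The combination of random jitter and an attracting force can be illustrated with a small integrator. Each particle receives a spring-like pull toward the target, some damping, and a Brownian kick every frame. The result is an organic-looking drift that still converges on the destination. This is a swapped-in toy model, not Blender's particle solver. All names and parameter values (`k`, `damping`, `brownian`, the 24 fps time step) are assumptions chosen for illustration.

```python
import math
import random


def simulate_particles(n, start, target, steps=96, dt=1 / 24, k=4.0,
                       damping=3.0, brownian=0.5, seed=0):
    """Move n particles from a release site toward a target.

    Acceleration = spring pull toward the target - damping * velocity,
    plus a Gaussian Brownian kick scaled by sqrt(dt) each step.
    """
    rng = random.Random(seed)
    # Spawn particles with a little jitter around the release site.
    pos = [[start[j] + rng.uniform(-0.05, 0.05) for j in range(3)]
           for _ in range(n)]
    vel = [[0.0, 0.0, 0.0] for _ in range(n)]
    for _ in range(steps):
        for p, v in zip(pos, vel):
            for j in range(3):
                accel = k * (target[j] - p[j]) - damping * v[j]
                v[j] += accel * dt + brownian * math.sqrt(dt) * rng.gauss(0.0, 1.0)
                p[j] += v[j] * dt  # semi-implicit Euler: uses the updated velocity
    return pos


def mean_distance(points, target):
    """Average straight-line distance from each particle to the target."""
    return sum(math.dist(p, target) for p in points) / len(points)


if __name__ == "__main__":
    release, receptor = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)  # hypothetical sites
    before = simulate_particles(50, release, receptor, steps=0)
    after = simulate_particles(50, release, receptor)
    print(mean_distance(before, receptor), mean_distance(after, receptor))
```

In this toy setting, duplicating a system for another round of emission amounts to calling `simulate_particles` again with a different `seed`. In a real scene, each copy would also get a later start frame. The Brownian term keeps each round from looking identical even though the overall flow is the same.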

Creating a flexible workflow through Rendering in Layers

Despite optimized models, the entire scene quickly became heavy. Because the camera remained static, I took the liberty of splitting the scene into layers. The furthest nerves would eventually be blurred and were not animated, so a single frame of them could be rendered to save time and resources. Rendering in layers had the added benefit of allowing flexible compositing and the addition of secondary motion to objects.
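Layered rendering relies on the standard "over" operator. A foreground layer with alpha is stacked on a background, so a static far layer rendered once can be reused under every animated foreground frame. Blender's compositor provides this through its Alpha Over node. The sketch below reimplements the operator on plain pixel tuples. It assumes premultiplied alpha and uses hypothetical 3-pixel images and function names.

```python
def over(fg, bg):
    """Premultiplied-alpha 'over': fg on top of bg. Pixels are (r, g, b, a)."""
    keep = 1.0 - fg[3]  # how much of the background shows through
    return tuple(f + b * keep for f, b in zip(fg, bg))


def composite(layers):
    """Stack equally sized layers back to front; layers[0] is the furthest."""
    result = list(layers[0])
    for layer in layers[1:]:
        result = [over(f, b) for f, b in zip(layer, result)]
    return result


if __name__ == "__main__":
    # Hypothetical images. The far-nerve layer is rendered once...
    far_nerves = [(0.1, 0.1, 0.2, 1.0)] * 3
    # ...while only the animated foreground changes from frame to frame.
    foreground_frames = [
        [(0.0, 0.0, 0.0, 0.0), (0.8, 0.4, 0.0, 1.0), (0.0, 0.0, 0.0, 0.0)],
        [(0.0, 0.0, 0.0, 0.0), (0.0, 0.0, 0.0, 0.0), (0.8, 0.4, 0.0, 1.0)],
    ]
    for fg in foreground_frames:
        print(composite([far_nerves, fg]))
```

The saving comes from `far_nerves` appearing once while `foreground_frames` grows with the animation length. Keeping layers separate until this final step is also what leaves room to blur, displace, or grade each one independently.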

Final polishing through Compositing

Each beauty render was accompanied by a collection of render passes (back-to-beauty passes, mist pass, object ID, etc.). Notable adjustments to the final footage included a bloom effect for the action potentials, atmospheric perspective, camera blur, color grading, and turbulent displacement for secondary motion.
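Two of these adjustments can be sketched in a few lines. Atmospheric perspective blends each pixel toward a fog color by its mist-pass value. Bloom isolates values above a threshold, blurs them, and adds them back so bright action potentials glow. The sketch below works on single pixels and a one-dimensional row of luminance values for brevity. It assumes a mist pass running from 0 (near) to 1 (far). The function names, the box blur, and all default parameters are hypothetical, not the project's compositor settings.

```python
def atmospheric_perspective(color, mist, fog_color, strength=1.0):
    """Blend an RGB pixel toward fog_color by its (assumed 0..1) mist value."""
    t = max(0.0, min(1.0, mist * strength))  # clamp the blend factor
    return tuple(c * (1.0 - t) + f * t for c, f in zip(color, fog_color))


def bloom(row, threshold=1.0, radius=2, intensity=0.5):
    """1-D bloom on a row of luminance values: bright-pass, box blur, add back."""
    bright = [max(0.0, v - threshold) for v in row]  # keep only the excess
    n = len(row)
    blurred = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        blurred.append(sum(bright[lo:hi]) / (hi - lo))  # box blur, edge-clamped
    return [v + intensity * b for v, b in zip(row, blurred)]


if __name__ == "__main__":
    # A red pixel halfway into the mist, pulled toward a blue-ish fog color.
    print(atmospheric_perspective((1.0, 0.0, 0.0), 0.5, (0.2, 0.3, 0.6)))
    # A single over-bright pixel (e.g. an action potential) spreads its glow.
    print(bloom([0.0, 0.0, 3.0, 0.0, 0.0], threshold=1.0, radius=1, intensity=1.0))
```

Driving both effects from render passes rather than baking them into the beauty render means their strength can be tuned at compositing time without re-rendering.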

The payoff.

Loop the footage for a seamless, leisurely viewing experience. Gyroscope-friendly, headset optional.